We are getting a lot of requests about how to deploy OpenStack in proof of concept (PoC) or production environments, since, let’s face it, setting up an OpenStack infrastructure from scratch without the aid of a deployment tool is not particularly suitable for faint-of-heart newcomers 🙂

DevStack, a tool that targets development environments, is still very popular for building proofs of concept as well, although the results can be quite different from deploying a stable release. Here’s an alternative that provides a very easy way to get OpenStack up and running using the latest stable OpenStack release.

RDO and Packstack

RDO is an excellent solution to go from zero to a fully working OpenStack deployment in a matter of minutes.

- RDO is simply a distribution of OpenStack for Red Hat Enterprise Linux (RHEL), Fedora and derivatives (e.g. CentOS).

- Packstack is a Puppet-based tool that simplifies the deployment of RDO.

There’s quite a lot of documentation about RDO and Packstack, but it mostly covers so-called all-in-one setups (a single server), which are IMO too trivial to be considered for anything beyond the most basic PoC, let alone a production environment. Most real OpenStack deployments are multi-node, which is quite natural given the highly distributed nature of OpenStack.

Some people might argue that limiting the effort to all-in-one setups is mandated by the available hardware resources. Before deciding in that direction, consider that you can run the scenarios described in this post entirely on VMs. For example, I’m currently using VMware Fusion virtual machines on a laptop, nested hypervisors (KVM and Hyper-V) included. This is quite a flexible scenario, as you can simulate as many hosts and networks as you need without the constraints of a physical environment.

Let’s start by describing what the OpenStack Grizzly multi-node setup that we are going to deploy looks like.

Controller

This is the OpenStack “brain”, running all Nova services except nova-compute and nova-network, plus quantum-server, Keystone, Glance, Cinder and Horizon (you can also add Swift and Ceilometer).

I typically assign 1GB of RAM and 30GB of disk space to this host (add more if you want to use large Cinder LVM volumes or big Glance images). On the networking side, only a single NIC (eth0), connected to the management network, is needed (more on networking soon).

Network Router

The job of this server is to run OpenVSwitch to provide networking among your virtual machines and between them and the Internet (or any other external network that you might define).

Besides OpenVSwitch, this node will run quantum-openvswitch-agent, quantum-dhcp-agent, quantum-l3-agent and quantum-metadata-agent.

1GB of RAM and 10GB of disk space are enough here. You’ll need three NICs, connected to the management (eth0), guest data (eth1) and public (eth2) networks.

Note: If you run this node as a virtual machine, make sure that the hypervisor’s virtual switches support promiscuous mode.

KVM compute node (optional)

This is one of the two hypervisors that we’ll use in our demo. Most people like to use KVM in OpenStack, so we are going to use it to run our Linux VMs.

The only OpenStack services required here are nova-compute and quantum-openvswitch-agent.

Allocate the RAM and disk resources for this node based on your requirements, especially the amount of RAM and disk space that you want to assign to your VMs. 4GB of RAM and 50GB of disk space can be considered a starting point. If you plan to run this host in a VM, make sure that the virtual CPU supports nested virtualization. Two NICs are required, connected to the management (eth0) and guest data (eth1) networks.
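A quick way to check nested virtualization from inside the VM that will become the KVM compute node is to look for the vmx (Intel VT-x) or svm (AMD-V) CPU flags. Here’s a minimal sketch; has_hw_virt is a hypothetical helper name, and the cpuinfo path is a parameter (defaulting to /proc/cpuinfo) only so that it can be tested against a sample file:

```shell
# Hypothetical helper: succeed if the CPU advertises hardware virtualization
# (vmx = Intel VT-x, svm = AMD-V). Usage: has_hw_virt [cpuinfo_file]
has_hw_virt() {
    cpuinfo_file=${1:-/proc/cpuinfo}
    # -w matches whole words only, so e.g. "vme" does not count as "vmx"
    grep -Eqw 'vmx|svm' "$cpuinfo_file"
}

if has_hw_virt; then
    echo "Hardware virtualization available: KVM can be used"
else
    echo "No vmx/svm flag found: enable nested virtualization on the outer hypervisor"
fi
```

If the flag is missing, KVM instances will not start with hardware acceleration on this node, so it’s worth fixing before running Packstack.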

Hyper-V compute node (optional)

Microsoft Hyper-V Server 2012 R2 is a great and completely free hypervisor; just grab a copy of the ISO from here. In the demo we are going to use it for running Windows instances, but besides that you can of course use it to run Linux or FreeBSD VMs as well. You can also grab a ready-made OpenStack Windows Server 2012 R2 Evaluation image from here, so there’s no need to learn how to package a Windows OpenStack image today. The OpenStack services required here are nova-compute and quantum-hyperv-agent. No worries, here’s an installer that will take care of setting them up for you; make sure to download the stable Grizzly release.

As for the resources to allocate for this host, the same considerations discussed for the KVM node apply here as well; just keep in mind that Hyper-V requires 16-20GB of disk space for the OS itself, including updates. I usually assign 4GB of RAM and 60-80GB of disk. Two NICs are required here as well, connected to the management and guest data networks.

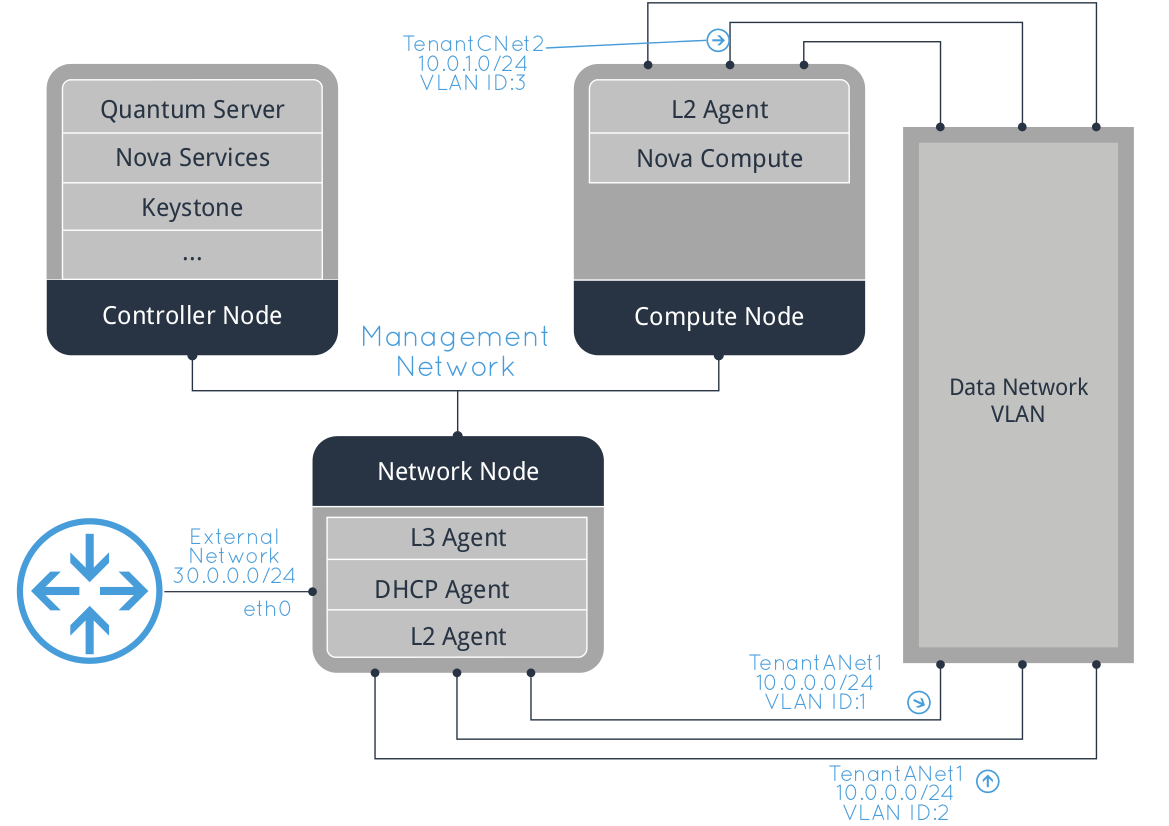

Networking

Let’s spend a few words on how the hosts are connected.

Management

This network is used for management only (e.g. running nova commands or connecting to the hosts via SSH). For security reasons, it should definitely not be accessible from the OpenStack instances.

Guest data

This is the network used by guests to communicate with each other and with the rest of the world. It’s important to note that although we are defining a single physical network, we’ll be able to define multiple isolated networks on top of it using VLANs or tunnelling. One of the requirements of our scenario is the ability to run groups of isolated instances for different tenants.

Public

Last, this is the network used by the instances to access external networks (e.g. the Internet), routed through the network host. External hosts (e.g. a client on the Internet) will be able to connect to some of your instances based on the floating IP and security group configuration.

Hosts configuration

Just do a minimal installation and configure your network adapters. We are using CentOS 6.4 x64, but RHEL 6.4, Fedora or Scientific Linux images are perfectly fine as well. Packstack will take care of getting all the requirements as we will soon see.

Once you are done with the installation, updating the hosts with yum update -y is a good practice.

Configure your management adapter (eth0) with a static IP, e.g. by directly editing the ifcfg-eth0 configuration file in /etc/sysconfig/network-scripts. As a basic example:

```
DEVICE="eth0"
ONBOOT="yes"
BOOTPROTO="static"
MTU="1500"
IPADDR="10.10.10.1"
NETMASK="255.255.255.0"
```

General networking configuration goes in /etc/sysconfig/network, e.g.:

```
GATEWAY=10.10.10.254
NETWORKING=yes
HOSTNAME=openstack-controller
```

And add your DNS configuration in /etc/resolv.conf, e.g.:

```
nameserver 208.67.222.222
nameserver 208.67.220.220
```

NICs connected to the guest data (eth1) and public (eth2) networks don’t require an IP. You also don’t need to add any OpenVSwitch configuration here; just make sure that the adapters are enabled on boot, e.g.:

```
DEVICE="eth1"
BOOTPROTO="none"
MTU="1500"
ONBOOT="yes"
```
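If you have several hosts to prepare, a small helper can generate these files for you. The sketch below is not part of RDO or Packstack: write_ifcfg is a hypothetical function that writes an ifcfg-<device> file in the format shown above, with a static address when an IP is passed and BOOTPROTO="none" otherwise. The target directory is a parameter, so you can try it in a scratch directory before pointing it at /etc/sysconfig/network-scripts. The 10.10.10.2 address in the usage comment matches the NETWORK_HOST value used later in the Packstack answers script.

```shell
# Hypothetical helper (not part of RDO or Packstack).
# Usage: write_ifcfg <dir> <device> [ipaddr] [netmask]
#   with an IP:    static configuration (management adapter, eth0)
#   without an IP: adapter only brought up on boot (eth1, eth2)
write_ifcfg() {
    dir=$1 dev=$2 ipaddr=$3 netmask=${4:-255.255.255.0}
    {
        echo "DEVICE=\"$dev\""
        echo "ONBOOT=\"yes\""
        echo "MTU=\"1500\""
        if [ -n "$ipaddr" ]; then
            echo "BOOTPROTO=\"static\""
            echo "IPADDR=\"$ipaddr\""
            echo "NETMASK=\"$netmask\""
        else
            echo "BOOTPROTO=\"none\""
        fi
    } > "$dir/ifcfg-$dev"
}

# Example for the network node (as root), followed by "service network restart":
#   write_ifcfg /etc/sysconfig/network-scripts eth0 10.10.10.2
#   write_ifcfg /etc/sysconfig/network-scripts eth1
#   write_ifcfg /etc/sysconfig/network-scripts eth2
```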

You can reload your network configuration with:

```shell
service network restart
```

Packstack

Once you have set up all your hosts, it’s time to install Packstack. Log in on the controller host console and run:

```shell
sudo yum install -y http://rdo.fedorapeople.org/openstack/openstack-grizzly/rdo-release-grizzly.rpm
sudo yum install -y openstack-packstack
sudo yum install -y openstack-utils
```

Now we need to create a so called “answer file” to tell Packstack how we want our OpenStack deployment to be configured:

```shell
packstack --gen-answer-file=packstack_answers.conf
```

One useful point about the answer file is that it is already populated with random passwords for all your services; change them as required.

Here’s a script that adds our configuration to the answer file. Change the IP addresses of the network and KVM compute hosts, along with any of the Cinder or Quantum parameters, to fit your scenario.

```shell
ANSWERS_FILE=packstack_answers.conf
NETWORK_HOST=10.10.10.2
KVM_COMPUTE_HOST=10.10.10.3
openstack-config --set $ANSWERS_FILE general CONFIG_SSH_KEY /root/.ssh/id_rsa.pub
openstack-config --set $ANSWERS_FILE general CONFIG_NTP_SERVERS 0.pool.ntp.org,1.pool.ntp.org,2.pool.ntp.org,3.pool.ntp.org
openstack-config --set $ANSWERS_FILE general CONFIG_CINDER_VOLUMES_SIZE 20G
openstack-config --set $ANSWERS_FILE general CONFIG_NOVA_COMPUTE_HOSTS $KVM_COMPUTE_HOST
openstack-config --del $ANSWERS_FILE general CONFIG_NOVA_NETWORK_HOST
openstack-config --set $ANSWERS_FILE general CONFIG_QUANTUM_L3_HOSTS $NETWORK_HOST
openstack-config --set $ANSWERS_FILE general CONFIG_QUANTUM_DHCP_HOSTS $NETWORK_HOST
openstack-config --set $ANSWERS_FILE general CONFIG_QUANTUM_METADATA_HOSTS $NETWORK_HOST
openstack-config --set $ANSWERS_FILE general CONFIG_QUANTUM_OVS_TENANT_NETWORK_TYPE vlan
openstack-config --set $ANSWERS_FILE general CONFIG_QUANTUM_OVS_VLAN_RANGES physnet1:1000:2000
openstack-config --set $ANSWERS_FILE general CONFIG_QUANTUM_OVS_BRIDGE_MAPPINGS physnet1:br-eth1
openstack-config --set $ANSWERS_FILE general CONFIG_QUANTUM_OVS_BRIDGE_IFACES br-eth1:eth1
```
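Before running Packstack, it can be worth double-checking what actually ended up in the answer file. openstack-config --get can read values back; the sketch below does the same with plain awk, so it also works on a copy of the file on a machine without openstack-utils. get_answer is a hypothetical helper, and it assumes the KEY=value lines live in the [general] section, as in the commands above.

```shell
# Hypothetical helper: print the value of KEY from the [general] section of
# a Packstack answer file. Usage: get_answer <file> <KEY>
# Whitespace around "=" is tolerated; commented-out lines never match a key.
get_answer() {
    awk -v key="$2" '
        /^[ \t]*\[/ { in_general = ($0 ~ /^[ \t]*\[general\]/); next }
        in_general {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { print v; exit }
        }
    ' "$1"
}

# Print a few of the values set above, if the answer file is in the current directory
if [ -f packstack_answers.conf ]; then
    for KEY in CONFIG_NOVA_COMPUTE_HOSTS CONFIG_QUANTUM_L3_HOSTS \
               CONFIG_QUANTUM_OVS_TENANT_NETWORK_TYPE CONFIG_QUANTUM_OVS_VLAN_RANGES; do
        echo "$KEY=$(get_answer packstack_answers.conf "$KEY")"
    done
fi
```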

Now all we have to do is run Packstack and wait for the configuration to be applied, including dependencies like MySQL Server and Apache Qpid (used by RDO as an alternative to RabbitMQ). You’ll have to provide the password to access the other nodes only once; afterwards Packstack will deploy an SSH key to the remote ~/.ssh/authorized_keys files. As anticipated, Puppet is used to perform the actual deployment.

```shell
packstack --answer-file=packstack_answers.conf
```

At the end of the execution, Packstack will ask you to install on the hosts a new Linux kernel provided as part of the RDO repository. This is needed because the kernel provided by RHEL (and thus CentOS) doesn’t support network namespaces, a feature needed by Quantum in this scenario. What Packstack doesn’t tell you is that the 2.6.32 kernel they provide will create a lot more issues with Quantum. At this point, why not install a modern 3.x kernel? 🙂

My suggestion is to skip the RDO kernel altogether and install the 3.4 kernel provided as part of the CentOS Xen project (which does not mean that we are installing Xen; we only need the kernel package).

Let’s update the kernel and reboot the network and KVM compute hosts from the controller (no need to install it on the controller itself):

```shell
for HOST in $NETWORK_HOST $KVM_COMPUTE_HOST
do
    ssh -o StrictHostKeyChecking=no $HOST "yum install -y centos-release-xen && yum update -y --disablerepo=* --enablerepo=Xen4CentOS kernel && reboot"
done
```
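Once the hosts are back online, you can verify that they actually booted the new kernel. A minimal sketch: kernel_is_3x is a hypothetical helper that takes a release string as printed by uname -r, and the loop reuses the NETWORK_HOST and KVM_COMPUTE_HOST variables defined in the answer file script above.

```shell
# Hypothetical helper: succeed if a kernel release string ("uname -r" format)
# has a major version of 3 or higher. Non-numeric input simply fails.
kernel_is_3x() {
    major=${1%%.*}
    [ "$major" -ge 3 ] 2>/dev/null
}

for HOST in $NETWORK_HOST $KVM_COMPUTE_HOST; do
    release=$(ssh "$HOST" uname -r)
    if kernel_is_3x "$release"; then
        echo "$HOST: $release OK"
    else
        echo "$HOST: $release is older than 3.x, check the kernel update"
    fi
done
```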

At the time of writing, there’s a bug in Packstack that affects multi-node scenarios, where the Quantum firewall driver is not set in the Open vSwitch plugin configuration (ovs_quantum_plugin.ini), causing failures in Nova. Here’s a simple fix to be executed on the controller (the alternative would be to disable the security groups feature altogether):

```shell
sed -i 's/^#\ firewall_driver/firewall_driver/g' /etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini
service quantum-server restart
```
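If you script the whole deployment, you may prefer to apply this fix only when it is still needed, and to restart quantum-server only if the setting is actually active afterwards. A sketch, where fix_firewall_driver is a hypothetical name and the sed expression is the same one used above:

```shell
# Hypothetical helper: uncomment firewall_driver only if it is not already
# active, then print the resulting setting. Returns non-zero if no active
# firewall_driver line exists afterwards (so the restart below is skipped).
fix_firewall_driver() {
    ini=${1:-/etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini}
    if grep -q '^firewall_driver' "$ini"; then
        echo "firewall_driver already enabled in $ini"
    else
        sed -i 's/^#\ firewall_driver/firewall_driver/g' "$ini"
    fi
    grep '^firewall_driver' "$ini"
}

# Usage on the controller:
#   fix_firewall_driver && service quantum-server restart
```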

We can now check if everything is working. First we need to set our environment variables:

```shell
source ./keystonerc_admin
export EDITOR=vim
```
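If client commands such as quantum agent-list complain about missing credentials, the usual cause is that keystonerc_admin was not sourced in the current shell. A small sketch that checks for this; check_os_env is a hypothetical helper, and it assumes keystonerc_admin exports the standard OS_USERNAME, OS_PASSWORD, OS_TENANT_NAME and OS_AUTH_URL variables.

```shell
# Hypothetical helper: report any missing OpenStack credential variable
# and return non-zero if at least one is unset or empty.
check_os_env() {
    missing=0
    for var in OS_USERNAME OS_PASSWORD OS_TENANT_NAME OS_AUTH_URL; do
        eval "value=\${$var:-}"
        if [ -z "$value" ]; then
            echo "$var is not set; did you source keystonerc_admin?" >&2
            missing=1
        fi
    done
    return $missing
}

# Usage: check_os_env && quantum agent-list
```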

Let’s check the nova services:

```shell
nova-manage service list
```

Here’s a sample output. If you see XXX in place of one of the smiley faces, it means that there’s something to fix 🙂

```
Binary           Host              Zone      Status   State  Updated_At
nova-conductor   os-controller.cbs internal  enabled  :-)    2013-07-14 19:08:17
nova-cert        os-controller.cbs internal  enabled  :-)    2013-07-14 19:08:19
nova-scheduler   os-controller.cbs internal  enabled  :-)    2013-07-14 19:08:17
nova-consoleauth os-controller.cbs internal  enabled  :-)    2013-07-14 19:08:19
nova-compute     os-compute.cbs    nova      enabled  :-)    2013-07-14 18:42:00
```
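On a larger deployment, scanning that output by eye gets tedious. Here’s a minimal awk-based sketch that prints the services reported as XXX and returns a non-zero exit code if any are found; check_nova_services is a hypothetical name, and it assumes the column layout shown above (State is the fifth whitespace-separated column).

```shell
# Hypothetical helper: read "nova-manage service list" output on stdin,
# print every service whose State column is XXX, exit 1 if any is down.
check_nova_services() {
    awk 'NR > 1 && $5 == "XXX" { print "DOWN: " $1 " on " $2; bad = 1 }
         END { exit bad }'
}

# Usage on the controller:
#   nova-manage service list | check_nova_services
```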

Now we can check the status of our Quantum agents on the network and KVM compute hosts:

```shell
quantum agent-list
```

You should get an output similar to the following one.

```
+--------------------------------------+--------------------+----------------+-------+----------------+
| id                                   | agent_type         | host           | alive | admin_state_up |
+--------------------------------------+--------------------+----------------+-------+----------------+
| 5dff6900-4f6b-4f42-b7f1-f2842439bc4a | DHCP agent         | os-network.cbs | :-)   | True           |
| 666a876f-6005-466b-9822-c31d48a5c9a8 | L3 agent           | os-network.cbs | :-)   | True           |
| 8c190fc5-990f-494f-85c0-f3964639274b | Open vSwitch agent | os-compute.cbs | :-)   | True           |
| cf62892e-062a-460d-ab67-4440a790715d | Open vSwitch agent | os-network.cbs | :-)   | True           |
+--------------------------------------+--------------------+----------------+-------+----------------+
```
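The same kind of check can be scripted for the Quantum agents. This sketch splits the table rows on the "|" separators and flags any agent whose alive column is not :-); check_quantum_agents is a hypothetical name, and it assumes the table layout shown above.

```shell
# Hypothetical helper: read "quantum agent-list" output on stdin, print each
# agent that is not alive, exit 1 if any are found. Border lines (+---+)
# and the header row are skipped.
check_quantum_agents() {
    awk -F'|' '
        /^[|]/ {
            type = $3; host = $4; alive = $5
            gsub(/^ +| +$/, "", type)
            gsub(/^ +| +$/, "", host)
            gsub(/^ +| +$/, "", alive)
            if (type == "agent_type") next
            if (alive != ":-)") { print "NOT ALIVE: " type " on " host; bad = 1 }
        }
        END { exit bad }'
}

# Usage: quantum agent-list | check_quantum_agents
```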

OpenVSwitch

On the network node we need to add the eth2 interface to the br-ex bridge:

```shell
ovs-vsctl add-port br-ex eth2
```

We can now check if the OpenVSwitch configuration has been applied correctly on the network and KVM compute nodes. Log in on the network node and run:

```shell
ovs-vsctl show
```

The output should look similar to:

```
f99276eb-4553-40d9-8bb0-bf3ac6e885e8
    Bridge br-int
        Port br-int
            Interface br-int
                type: internal
        Port "int-br-eth1"
            Interface "int-br-eth1"
    Bridge "br-eth1"
        Port "eth1"
            Interface "eth1"
        Port "br-eth1"
            Interface "br-eth1"
                type: internal
        Port "phy-br-eth1"
            Interface "phy-br-eth1"
    Bridge br-ex
        Port br-ex
            Interface br-ex
                type: internal
        Port "eth2"
            Interface "eth2"
    ovs_version: "1.10.0"
```

Note that eth1 is a member of br-eth1 and eth2 is a member of br-ex. If they are missing, you can add them now.
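If you’d rather check this from a script than by eye, the sketch below parses the ovs-vsctl show output and tells you whether a given port belongs to a given bridge. bridge_has_port is a hypothetical helper; it relies on the Bridge / Port layout shown above and ignores the quotes around names.

```shell
# Hypothetical helper: read "ovs-vsctl show" output on stdin and succeed if
# port $2 is listed under bridge $1. Usage: bridge_has_port <bridge> <port>
bridge_has_port() {
    awk -v want_br="$1" -v want_port="$2" '
        { gsub(/"/, "") }                 # strip quotes, fields are re-split
        $1 == "Bridge" { br = $2 }        # remember the current bridge
        $1 == "Port" && br == want_br && $2 == want_port { found = 1 }
        END { exit !found }'
}

# Usage on the network node:
#   ovs-vsctl show | bridge_has_port br-eth1 eth1 || echo "eth1 is missing from br-eth1"
#   ovs-vsctl show | bridge_has_port br-ex eth2 || echo "eth2 is missing from br-ex"
```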

To add a bridge, should br-eth1 be missing:

```shell
ovs-vsctl add-br br-eth1
```

To add the eth1 port to the bridge:

```shell
ovs-vsctl add-port br-eth1 eth1
```

You can now repeat the same procedure on the KVM compute node, considering only br-eth1 and eth1 (there’s no eth2).
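These steps can also be made idempotent with ovs-vsctl’s --may-exist option, which makes add-br and add-port succeed without changes when the bridge or port is already there. ensure_bridge_port is a hypothetical wrapper, a sketch rather than something Packstack provides:

```shell
# Hypothetical wrapper: create bridge $1 if missing, then attach port $2 to it
# if not already attached. Safe to run more than once.
ensure_bridge_port() {
    ovs-vsctl --may-exist add-br "$1" &&
        ovs-vsctl --may-exist add-port "$1" "$2"
}

# Network node:
#   ensure_bridge_port br-eth1 eth1
#   ensure_bridge_port br-ex eth2
# KVM compute node:
#   ensure_bridge_port br-eth1 eth1
```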

What’s next?

Ok, enough for today. In the forthcoming Part 2 we’ll see how to add a Hyper-V compute node to the mix!

This is some good stuff! May I ask when Part 2 will be made available?

Thanks! Part 2 is planned to be published this month. We are also collecting feedback from users, like features or issues they would like to see covered, etc.

Very nice article guys! Proud to see Italians so deeply involved with Openstack!

Thanks!!

In terms of nationality, the company HQ offices are located in Timisoara (Romania), so Cloudbase is an Italian and Romanian “multinational” startup 🙂

Alessandro

We are still waiting for Part 2

It’ll be ready soon 🙂

Great info. Great work. Please keep it up!

Can’t wait to see part 2!

Thanks, part 2 is coming really soon!

Hi,

Many thanks for this excellent article, as it is very practical and useful.

If I may, I have a few suggestions/requests:

It would be nice to cover a network topology that includes bonding of the physical interfaces on the hosts (mainly the Network Router), with bond interfaces built on top of them to carry a sufficient number of VLANs, to be used between or exclusively by the VMs running on the hosts.

This would be helpful, as most requirements need more VLANs to be accommodated, and sometimes separated as well. We cannot expect to have the same number of physical interfaces available to manage these networks separately.

I assume that the bond interfaces allow the creation of a network bridge for each VLAN, which would give more flexibility.

Also, an approach using GRE tunnels to differentiate traffic (probably instead of VLANs), making it easier to operate VMs across multiple hosts and to refine the connections between VMs running on different hosts, could be very interesting and truly helpful.

and, we all are waiting for your part-2 🙂

Thanks a ton for your efforts and help!

Hi Ashraf,

Thanks for your suggestions! Interface teaming / bonding will definitely be part of one of the next articles in this RDO series focused on fault tolerance and high availability.

About the VLANs, the provided example includes roughly 1000 VLAN tags (IDs 1000 to 2000), each of which can be assigned to a separate Quantum / Neutron network. This should cover most small / mid-sized deployments in a multi-tenant scenario, as guests on different networks won’t be able to see each other.

About GRE, we are not currently including it in the examples, as interoperability among hypervisors is one of the goals of this series of posts. The port of OpenVSwitch to Windows is progressing well and fast anyway, so we’re going to add a post on RDO + GRE quite soon. 🙂

So we get a static route to the metadata service when we boot a Windows VM:

169.254.169.254 -> 10.240.45.42 (route to dhcp server ip)

If you browse in IE to 169.254.169.254 you see the metadata service

If you look at the cloudbase-init logs in Program Files (x86), you’ll see the URLs it tries to hit on boot: it tries the URLs, then falls back to using “cached metadata”. As a result, it doesn’t get the admin password set through the Horizon UI, but instead sets its own random one and tries to post it to the API.

The same Hyper-V node can boot that Ubuntu VM from Cloudbase and get metadata fine from my metadata service. We’re running “isolated metadata” attached to our DHCP agent in Neutron so that we can leverage our existing routers.

Looking at the code for cloudbase-init I don’t see any obvious reasons it wouldn’t work.

Also of note, once we go in through the console and set a new Administrator password (not the Admin user that cloudbase-init should change), networking is fully working and integrated between Neutron ML2 and Hyper-V. VMs on Linux KVM nodes can talk to the Windows ones and vice versa.

Hi Evin, can you please post the cloudbase-init log on a pastebin to better troubleshoot this issue?

Thanks,

Alessandro