This is the first blog post in a series about running mixed x86 and ARM64 Kubernetes clusters. The series starts with a general architectural overview and then moves on to detailed step-by-step instructions.

Kubernetes doesn’t need any introduction at this point, as it has become the de facto standard container orchestration system. If you, dear reader, have developed or deployed any workload in the last few years, there’s a very high probability that you had to interact with a Kubernetes cluster.

Most users deploy Kubernetes via services provided by their hyperscaler of choice, typically employing virtual machines as the underlying isolation mechanism. This is a well-proven solution, but it comes at the expense of inefficient resource usage, causing many organizations to look for alternatives in order to reduce costs.

One solution consists of deploying Kubernetes on top of an existing on-premises infrastructure running a full-scale IaaS solution like OpenStack, or a traditional virtualization solution like VMware, using VMs underneath. This is similar to what happens on public clouds, with the advantage of allowing users to mix legacy virtualized workloads with modern container-based applications on top of the same infrastructure. It is a very popular option, as we see a rise in OpenStack deployments for this specific purpose.

However, more and more companies are interested in dedicated infrastructure for their Kubernetes clusters, especially for Edge use cases. In that setting, there’s no need for an underlying IaaS or virtualization layer that adds unnecessary complexity and performance limitations.

This is where deploying Kubernetes on bare metal servers really shines, as the clusters can take full advantage of the whole infrastructure, often with significant TCO benefits. Running on bare metal allows us to freely choose between the x64 and ARM64 architectures, or a combination of both, combining the lower energy footprint provided by ARM servers with the compatibility offered by the more common x64 architecture.
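To make the mixed-architecture idea concrete, here is a minimal Python sketch (not part of our deployment tooling) that builds a Pod manifest pinned to specific CPU architectures through node affinity on the well-known `kubernetes.io/arch` node label, where x64 nodes report `amd64` and ARM64 nodes report `arm64`. The function name, the pod name and the image are hypothetical placeholders.

```python
from __future__ import annotations

import json

# Well-known node label that carries the node's CPU architecture.
ARCH_LABEL = "kubernetes.io/arch"


def pod_manifest(name: str, image: str, architectures: list[str]) -> dict:
    """Build a Pod manifest that may only be scheduled on the given architectures."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "affinity": {
                "nodeAffinity": {
                    "requiredDuringSchedulingIgnoredDuringExecution": {
                        "nodeSelectorTerms": [
                            {
                                "matchExpressions": [
                                    {
                                        "key": ARCH_LABEL,
                                        "operator": "In",
                                        "values": list(architectures),
                                    }
                                ]
                            }
                        ]
                    }
                }
            },
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    # "example.org/web:1.0" is a placeholder image, assumed to be multi-arch.
    manifest = pod_manifest("web", "example.org/web:1.0", ["amd64", "arm64"])
    # JSON is accepted by kubectl just like YAML.
    print(json.dumps(manifest, indent=2))
```

A multi-arch image can list both values, while an image built for a single architecture should list only that one, so the scheduler never places it on a node that cannot run it.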

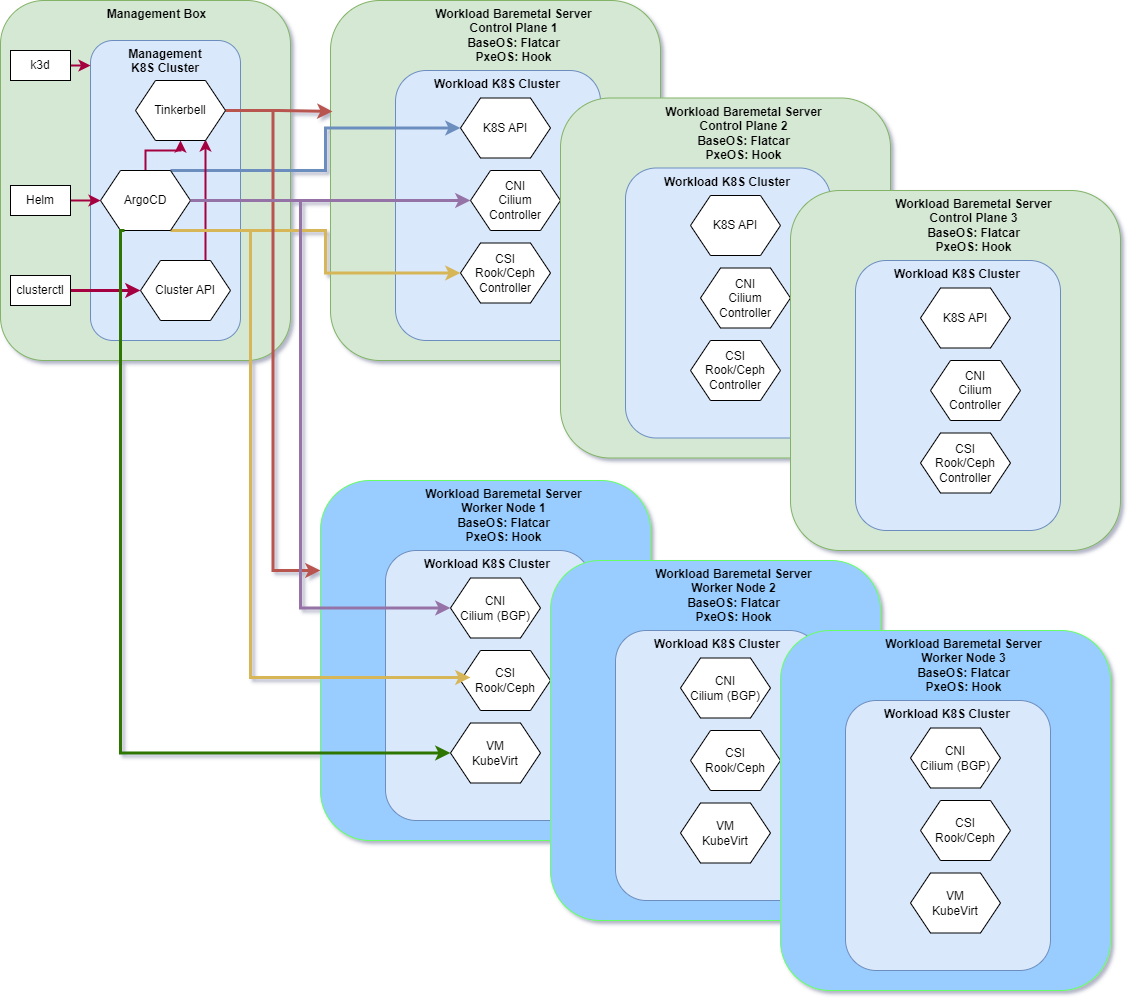

A Kubernetes infrastructure comes with non-trivial complexity, which requires a fully automated solution for deployment, management, upgrades, observability and monitoring. Here’s a brief list of the key components in the solution that we are about to present.

Host operating system

When it comes to Linux, there’s definitely no lack of options. We needed a Linux distro aimed at lean container infrastructure workloads, with a large deployment base on many different physical servers, avoiding a full-fledged traditional Linux server footprint. We decided to use Flatcar, for a series of reasons:

- Longstanding (in cloud years) proven success, being the continuation of CoreOS

- CNCF incubating project

- Active community, expert in container scenarios, including Cloudbase engineers

- Support for both ARM64 and x64

- Commercial support, provided by Cloudbase, as a result of the partnership with Microsoft / Kinvolk

The host OS options are not limited to Flatcar: we successfully tested many other alternatives, including Mariner and Ubuntu. This is not a trivial task, as packaging and optimizing images for this sort of infrastructure requires significant domain expertise.

Bare metal host provisioning

This component allows us to boot every host via IPMI (or another API provided by the BMC), install the operating system via PXE and, in general, configure every aspect of the host OS and Kubernetes in an automated way. Over the years we have worked with many open source host provisioning solutions (MAAS, Ironic, Crowbar), but we opted for Tinkerbell in this case due to its integration in the Kubernetes ecosystem and support for Cluster API (CAPI).

Distributed storage

Although the solution presented here can support traditional SAN storage, the storage model in our scenario will be distributed and hyperconverged, with every server providing compute, storage and networking roles. We chose Ceph (deployed via Rook), as it is the leading open source distributed storage solution and given our involvement in the community. When properly configured, it can deliver outstanding performance even on small clusters.
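As a rough illustration of what a hyperconverged model means for capacity planning, the sketch below estimates the usable space of a replicated pool spread across all servers. It assumes three replicas (Ceph's default for replicated pools) and a hypothetical 80% fill target to leave headroom for recovery. Both numbers, and the function name, are assumptions for illustration, not figures from our deployment.

```python
def usable_capacity_tib(
    servers: int,
    raw_tib_per_server: float,
    replicas: int = 3,        # assumption: replicated pool with 3 copies
    fill_ratio: float = 0.8,  # assumption: keep 20% free for rebalancing
) -> float:
    """Estimate usable capacity (TiB) of a replicated pool across all servers."""
    if servers < replicas:
        # Each replica should live on a different server.
        raise ValueError("need at least as many servers as replicas")
    if not 0 < fill_ratio <= 1:
        raise ValueError("fill_ratio must be in (0, 1]")
    raw_total = servers * raw_tib_per_server
    # Every object is stored `replicas` times, so divide the raw total.
    return raw_total / replicas * fill_ratio


if __name__ == "__main__":
    # Six servers with 8 TiB of raw disk each: 48 TiB raw in total.
    print(f"{usable_capacity_tib(6, 8.0):.1f} TiB usable")
```

With these assumptions, 48 TiB of raw disk yields roughly 12.8 TiB of usable space, which is worth keeping in mind when sizing small clusters.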

Networking and load balancing

While traditionally Kubernetes clusters employ Flannel or Calico for networking, a bare metal scenario can take advantage of a more modern technology like Cilium. Additionally, Cilium can provide load balancing via BGP out of the box, without the need for additional components like MetalLB.

High availability

All components in the deployment are designed with high availability in mind, including storage, networking, compute nodes and API.
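One way to reason about availability is quorum arithmetic. The sketch below assumes majority-based components, such as the etcd datastore behind the Kubernetes API or the Ceph monitors, and computes how many member failures a group of a given size can survive. The function names are hypothetical, and the actual member counts depend on the deployment.

```python
def quorum(members: int) -> int:
    """Smallest majority of a group with `members` voting members."""
    if members < 1:
        raise ValueError("a group needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in (1, 3, 4, 5):
        print(f"{n} members: quorum={quorum(n)}, "
              f"tolerated failures={tolerated_failures(n)}")
```

Three members tolerate one failure and five tolerate two, while a fourth member adds no extra tolerance over three, which is why odd member counts are the usual choice.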

Declarative GitOps

Argo CD offers a declarative way to manage the whole CI/CD deployment pipeline. Other open source alternatives like Tekton or FluxCD can also be employed.
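To give an idea of what "declarative" means here, the following minimal sketch builds an Argo CD `Application` resource that points at a Git repository and keeps the cluster in sync with it. The repository URL, path, names and the `argocd` namespace are hypothetical placeholders, and the helper function is ours for illustration only.

```python
import json


def argo_application(name: str, repo_url: str, path: str,
                     namespace: str, revision: str = "HEAD") -> dict:
    """Build an Argo CD Application that syncs a Git path into a namespace."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Assumption: Argo CD is installed in the "argocd" namespace.
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                "path": path,
                "targetRevision": revision,
            },
            "destination": {
                # In-cluster API server address, i.e. the cluster Argo CD runs in.
                "server": "https://kubernetes.default.svc",
                "namespace": namespace,
            },
            # Automatically apply Git changes and revert manual drift.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


if __name__ == "__main__":
    app = argo_application(
        name="monitoring",
        repo_url="https://git.example.org/infra/cluster-config.git",  # placeholder
        path="apps/monitoring",
        namespace="monitoring",
    )
    print(json.dumps(app, indent=2))
```

The desired state lives in Git, and the automated sync policy asks Argo CD to apply changes and undo drift without manual intervention.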

Observability and monitoring

Last but not least, observability is a key area that goes beyond simple logs, metrics and traces: it ensures that the whole infrastructure performs as expected. For this we employ Prometheus and Grafana. For monitoring, and to ensure that prompt action can be taken in case of issues, we use Sentry.

Coming next

The next blog posts in this series will explain in detail how to deploy the whole architecture presented here. Thanks for reading!