One might ask what the point is of running cloud infrastructure software like OpenStack on top of another cloud, namely the Azure public cloud, as in this blog post. The main use cases are typically testing and API compatibility, but since Azure nested virtualization and pass-through features have recently come a long way in terms of performance, more advanced use cases are now viable, especially in areas where OpenStack has a strong user base (e.g. Telcos).

There are many ways to deploy OpenStack; in this post we will use Kolla Ansible for a containerized OpenStack, with Ubuntu 20.04 Server as the host OS.

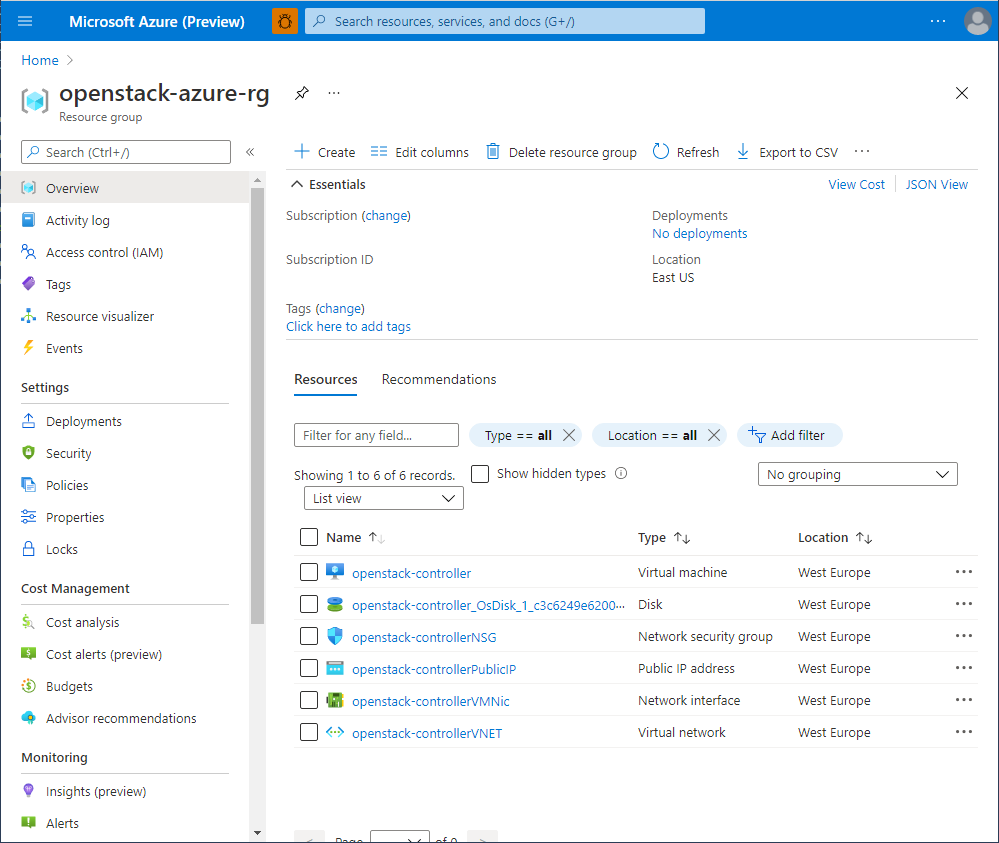

Preparing the infrastructure

In our scenario, we need at least one beefy virtual machine that supports nested virtualization and can handle all the CPU, RAM and storage requirements of a full-fledged All-In-One OpenStack. For this purpose, we chose the Standard_D8s_v3 size (8 vCPUs, 32 GB RAM) for the OpenStack controller virtual machine, with 512 GB of storage. For a multinode deployment, the subject of a future post, more virtual machines can be added, depending on how many OpenStack instances the deployment needs to support.
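Once the VM is running, it is worth confirming that nested virtualization is actually exposed to it before deploying. The snippet below is a minimal sketch: the function names `has_hw_virt` and `has_kvm_device` are hypothetical, and it assumes that the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flag in `/proc/cpuinfo`, together with the presence of `/dev/kvm`, are good enough signals. If these checks fail, Nova would have to fall back to plain QEMU emulation, which is considerably slower.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a cpuinfo-style file advertises hardware
# virtualization extensions (Intel VT-x "vmx" or AMD-V "svm" CPU flags).
has_hw_virt() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  grep -Eqw 'vmx|svm' "$cpuinfo"
}

# Hypothetical helper: succeed if the KVM device node exists; it appears
# once the kvm kernel modules are loaded.
has_kvm_device() {
  [ -e "${1:-/dev/kvm}" ]
}

# Example usage on the controller VM (assumption: run after SSH-ing into it):
# has_hw_virt && has_kvm_device && echo "nested virtualization available"
```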

To use the Azure CLI from PowerShell, install it following the instructions at https://docs.microsoft.com/en-us/cli/azure/install-azure-cli.

```powershell
# connect to Azure
az login

# create an ssh key for authentication
ssh-keygen

# create the OpenStack controller VM
# (the "openstack-rg" resource group must already exist, e.g. created with az group create)
az vm create `
  --name openstack-controller `
  --resource-group "openstack-rg" `
  --subscription "openstack-subscription" `
  --image Canonical:0001-com-ubuntu-server-focal:20_04-lts-gen2:latest `
  --location westeurope `
  --admin-username openstackuser `
  --ssh-key-values ~/.ssh/id_rsa.pub `
  --nsg-rule SSH `
  --os-disk-size-gb 512 `
  --size Standard_D8s_v3

# az vm create will output the public IP of the instance
$openStackControllerIP = "<IP of the VM>"

# create the static private IP used by Kolla as VIP
az network nic ip-config create --name MyIpConfig `
  --nic-name openstack-controllerVMNic `
  --private-ip-address 10.0.0.10 `
  --resource-group "openstack-rg" `
  --subscription "openstack-subscription"

# connect via SSH to the VM
ssh openstackuser@$openStackControllerIP
```

The remaining commands are run on the VM over SSH:

```shell
# fix the fqdn
# Kolla/Ansible does not work with *.cloudapp FQDNs, so we need to fix it
# (append with -a, so that the existing localhost entries are kept)
sudo tee -a /etc/hosts << EOT
$(hostname -i) $(hostname)
EOT

# create a dummy interface that will be used by OpenVswitch as the external bridge port
# Azure Public Cloud does not allow spoofed traffic, so we need to rely on NAT for VMs to
# have outbound connectivity.
# note: this interface is not persistent and must be recreated after a reboot
sudo ip tuntap add mode tap br_ex_port
sudo ip link set dev br_ex_port up
```
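If these preparation steps end up in a script that may run more than once, appending to `/etc/hosts` blindly can leave duplicate entries. Below is a minimal sketch of an idempotent alternative, using a hypothetical `ensure_hosts_entry` helper that only appends the `IP NAME` line when the name is not mapped in the file yet.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts-style file only if NAME
# is not already listed as a host name on a non-comment line.
ensure_hosts_entry() {
  local file="$1" ip="$2" name="$3"
  # awk exits 0 when NAME appears in any field after the address column
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

From a root shell on the VM (e.g. after `sudo -i`), the call would be `ensure_hosts_entry /etc/hosts "$(hostname -i)" "$(hostname)"`; running it a second time leaves the file unchanged.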

OpenStack deployment

For the deployment, we will use the Kolla Ansible containerized approach.

First, install the base packages required by Ansible, Kolla and the Cinder NFS backend.

```shell
# from the Azure OpenStack Controller VM
sudo apt update

# install ansible/kolla requirements
sudo apt install -y python3-dev libffi-dev gcc libssl-dev python3-venv net-tools

# install Cinder NFS backend requirements
sudo apt install -y nfs-kernel-server

# Cinder NFS setup
# use the VM's private IP: the Azure public IP is not assigned to any interface inside the VM
CINDER_NFS_HOST=$(hostname -i)
# Replace with your local network CIDR if you plan to add more nodes
CINDER_NFS_ACCESS=$CINDER_NFS_HOST
sudo mkdir /kolla_nfs
echo "/kolla_nfs $CINDER_NFS_ACCESS(rw,sync,no_root_squash)" | sudo tee -a /etc/exports
# the Kolla config directory must exist before nfs_shares can be written
sudo mkdir -p /etc/kolla/config
echo "$CINDER_NFS_HOST:/kolla_nfs" | sudo tee -a /etc/kolla/config/nfs_shares
sudo systemctl restart nfs-kernel-server
```
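The `CINDER_NFS_ACCESS` comment suggests switching to the local network CIDR once more nodes are added. As a sketch of the arithmetic involved, the hypothetical `network_cidr` helper below masks an IPv4 address with its prefix length in pure Bash. The /24 in the usage line assumes the default subnet created by `az vm create`, so check your own VNet settings.

```shell
#!/usr/bin/env bash
# Hypothetical helper: dotted-quad IPv4 address -> 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: 32-bit integer -> dotted-quad IPv4 address.
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Hypothetical helper: network address of IP/PREFIX in CIDR notation,
# e.g. a candidate value for CINDER_NFS_ACCESS covering the whole subnet.
network_cidr() {
  local ip=$1 prefix=$2
  # Bash arithmetic is 64-bit, so the shift is masked back down to 32 bits
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local addr
  addr=$(ip_to_int "$ip")
  local net=$(( addr & mask ))
  echo "$(int_to_ip "$net")/$prefix"
}
```

On the controller, `CINDER_NFS_ACCESS=$(network_cidr "$(hostname -i)" 24)` would then export the share to the whole subnet; `/etc/exports` accepts the resulting `network/prefix` form.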

Afterwards, let’s install Ansible/Kolla in a Python virtualenv.

```shell
mkdir kolla
cd kolla
python3 -m venv venv
source venv/bin/activate
pip install -U pip
pip install wheel
pip install 'ansible<2.10'
pip install 'kolla-ansible>=11,<12'
```

Then, prepare Kolla configuration files and passwords.

```shell
sudo mkdir -p /etc/kolla/config
sudo cp -r venv/share/kolla-ansible/etc_examples/kolla/* /etc/kolla
sudo chown -R $USER:$USER /etc/kolla
cp venv/share/kolla-ansible/ansible/inventory/* .
kolla-genpwd
```

Now, let’s check that Ansible works.

```shell
ansible -i all-in-one all -m ping
```

As a next step, we need to configure the OpenStack settings.

```shell
# This is the static IP we created initially
VIP_ADDR=10.0.0.10
# Azure VM interface is eth0
MGMT_IFACE=eth0
# This is the dummy interface used for OpenVswitch
EXT_IFACE=br_ex_port
# OpenStack Victoria version (matches the kolla-ansible 11.x release installed above)
OPENSTACK_TAG=11.0.0

# now use the information above to write it to Kolla configuration file
sudo tee -a /etc/kolla/globals.yml << EOT
kolla_base_distro: "ubuntu"
openstack_tag: "$OPENSTACK_TAG"
kolla_internal_vip_address: "$VIP_ADDR"
network_interface: "$MGMT_IFACE"
neutron_external_interface: "$EXT_IFACE"
enable_cinder: "yes"
enable_cinder_backend_nfs: "yes"
enable_neutron_provider_networks: "yes"
EOT
```
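Kolla expects `kolla_internal_vip_address` to be an unused address on the management network. A minimal pre-flight sketch, with hypothetical `ip_in_cidr` and `check_vip` helpers, verifies that the VIP is not the host's own address and falls inside the management subnet. The 10.0.0.0/24 in the usage line is an assumption based on the Azure defaults.

```shell
#!/usr/bin/env bash
# Hypothetical helper: dotted-quad IPv4 address -> 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeed if IP lies inside NETWORK/PREFIX.
ip_in_cidr() {
  local ip=$1 cidr=$2
  local net=${cidr%/*} prefix=${cidr#*/}
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Hypothetical helper: reject a VIP equal to the host IP or outside the subnet.
check_vip() {
  local vip=$1 host_ip=$2 cidr=$3
  if [ "$vip" = "$host_ip" ]; then
    echo "VIP $vip must differ from the host address" >&2
    return 1
  fi
  if ! ip_in_cidr "$vip" "$cidr"; then
    echo "VIP $vip is outside $cidr" >&2
    return 1
  fi
}
```

For example, `check_vip "$VIP_ADDR" "$(hostname -i)" 10.0.0.0/24` could be run right before the prechecks.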

Now it is time to deploy OpenStack.

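```shell
# bootstrap-servers installs Docker and the other host dependencies,
# so it must run before the prechecks
kolla-ansible -i ./all-in-one bootstrap-servers
kolla-ansible -i ./all-in-one prechecks
kolla-ansible -i ./all-in-one deploy
```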

After the deployment, we need to install the OpenStack command line clients and create the admin environment variable script.

```shell
pip3 install python-openstackclient python-barbicanclient python-heatclient python-octaviaclient
kolla-ansible post-deploy
# Load the vars to access the OpenStack environment
. /etc/kolla/admin-openrc.sh
```
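Before running further `openstack` commands, a quick check that the credentials script was actually sourced can save some confusion. This is a sketch with a hypothetical `require_env` helper; the four `OS_*` names in the usage line are the standard Keystone authentication variables, which the Kolla-generated `admin-openrc.sh` is expected to set.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every named environment variable is
# set and non-empty; report the missing ones on stderr.
require_env() {
  local missing=0 var
  for var in "$@"; do
    # ${!var} is Bash indirect expansion: the value of the variable named by $var
    if [ -z "${!var:-}" ]; then
      echo "missing: $var" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

A typical use would be `require_env OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_PROJECT_NAME && openstack token issue`.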

Let’s make the finishing touches and create an OpenStack instance.

```shell
# Set your external network CIDR, range and gateway, matching your environment, e.g.:
export EXT_NET_CIDR='10.0.2.0/24'
export EXT_NET_RANGE='start=10.0.2.150,end=10.0.2.199'
export EXT_NET_GATEWAY='10.0.2.1'
./venv/share/kolla-ansible/init-runonce

# Enable NAT so that VMs can have Internet access and be able to
# reach their floating IP from the controller node.
# (the 255.255.255.0 netmask matches the /24 EXT_NET_CIDR above)
sudo ifconfig br-ex $EXT_NET_GATEWAY netmask 255.255.255.0 up
sudo iptables -t nat -A POSTROUTING -s $EXT_NET_CIDR -o eth0 -j MASQUERADE

# Create a demo VM
openstack server create --image cirros --flavor m1.tiny --key-name mykey --network demo-net demo1
```
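The `ifconfig` call hardcodes a 255.255.255.0 netmask, which only matches a /24 `EXT_NET_CIDR`. If a different external network is chosen, the netmask can be derived from the prefix length instead. A minimal sketch with a hypothetical `prefix_to_netmask` helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a prefix length (0-32) to a dotted netmask.
prefix_to_netmask() {
  local prefix=$1
  if (( prefix < 0 || prefix > 32 )); then
    echo "invalid prefix: $prefix" >&2
    return 1
  fi
  # Bash arithmetic is 64-bit, so the shift is masked back down to 32 bits
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  echo "$(( (mask >> 24) & 255 )).$(( (mask >> 16) & 255 )).$(( (mask >> 8) & 255 )).$(( mask & 255 ))"
}
```

With it, the bridge could be configured as `sudo ifconfig br-ex "$EXT_NET_GATEWAY" netmask "$(prefix_to_netmask "${EXT_NET_CIDR#*/}")" up`. Alternatively, iproute2 takes the prefix length directly, so no conversion is needed: `sudo ip addr add "$EXT_NET_GATEWAY/${EXT_NET_CIDR#*/}" dev br-ex && sudo ip link set dev br-ex up`.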

Conclusions

Deploying OpenStack on Azure is fairly straightforward, with the caveat that the OpenStack instances cannot be accessed from the Internet without further changes. This affects only inbound traffic: the OpenStack instances can still access the Internet. Here are the main changes we introduced to perform the deployment in this scenario:

- Add a static IP on the first interface that will be used as the OpenStack API IP

- Set the OpenStack Controller FQDN to be the same as the hostname

- Create a dummy interface which will be used as the br-ex external port (there is no need for a secondary NIC, as Azure drops any spoofed packets)

- Add iptables NAT rules to allow OpenStack VM outbound (Internet) connectivity