Make sure to check out the previous blog post introducing Ceph on Windows, in case you’ve missed it. We are now going to look at what the performance looks like.

Before this Windows port of Ceph, the only way to access Ceph storage from Windows was by using the Ceph iSCSI gateway, which can easily become a performance bottleneck. Our goal was to outperform the iSCSI gateway and to get as close to the native Linux RBD throughput as possible.

Spoiler alert: we managed to surpass both!

Test environment

Before showing some actual results, let’s talk a bit about the test environment. We used 4 identical baremetal servers with the following specs:

- CPU
  - Intel(R) Xeon(R) E5-2650 @ 2.00GHz
  - 2 sockets
  - 8 cores per socket
  - 32 vcpus

- memory
  - 128GB
  - 1333MHz

- network adapters
  - Chelsio T420-C
  - 2 x 10Gb/s
  - LACP bond
  - 9000 MTU

You may have noticed that we are not mentioning storage disks. That’s because the Ceph OSDs were configured to use memory-backed pools. Keep in mind that we’re putting the emphasis on Ceph client performance, not the storage IOPS on the Linux OSD side.

We used an all-in-one Ceph 16 cluster running on top of Ubuntu 20.04. On the client side, we covered Windows Server 2016, Windows Server 2019 as well as Ubuntu 20.04.

The benchmarks have been performed using the fio tool. It’s highly configurable, commonly used for Ceph benchmarks and most importantly, it’s cross platform.

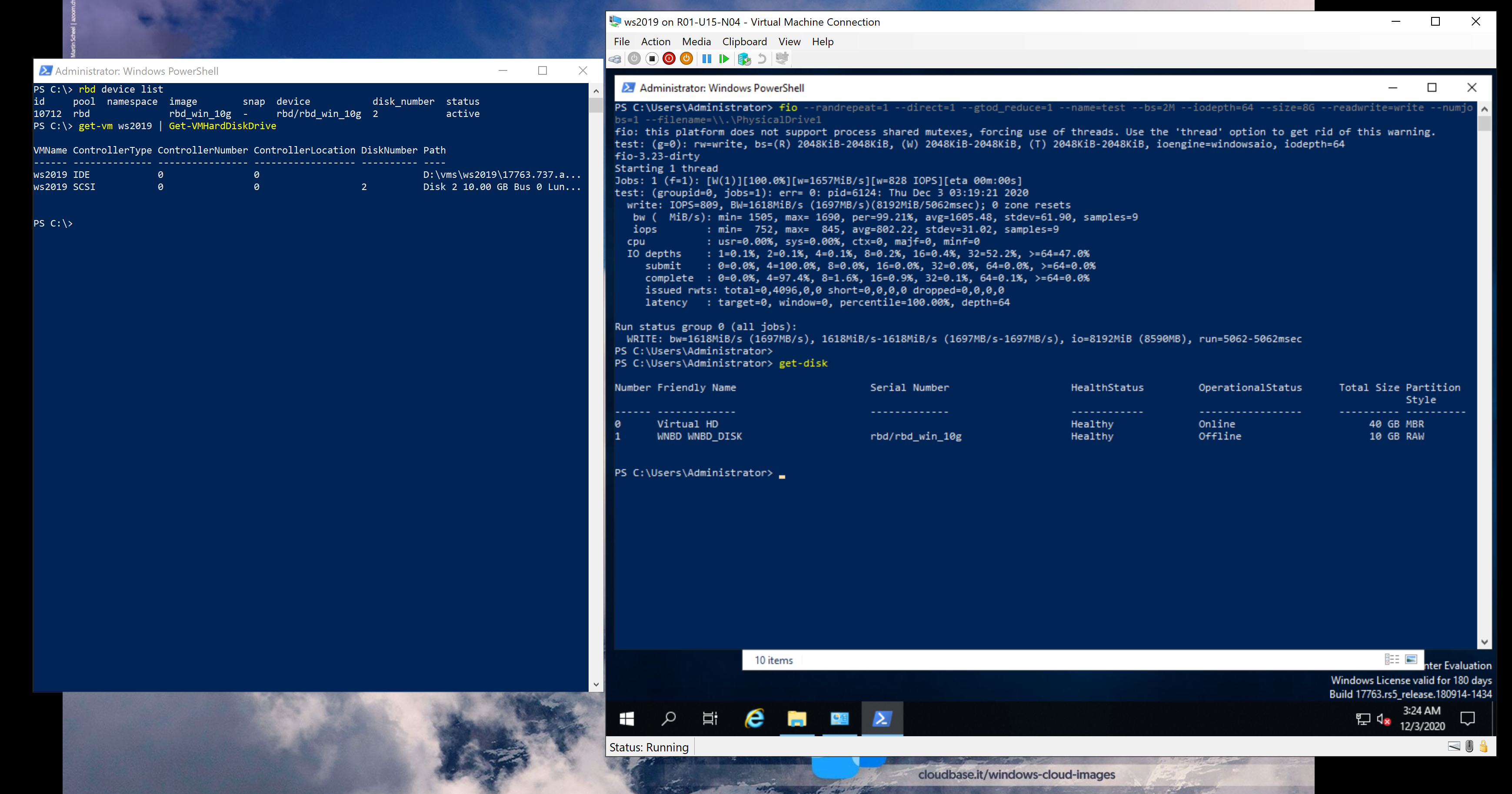

Below is a sample FIO command line invocation for anyone interested in repeating the tests. We are using direct IO, 2MB blocks, 64 concurrent asynchronous IO operations, testing various IO operations over 8GB chunks. When testing block devices, we are using the raw disk device as opposed to having an NTFS or ReFS partition. Note that this may require fio>=3.20 on Windows.

    fio --randrepeat=1 --direct=1 --gtod_reduce=1 --name=test --bs=2M --iodepth=64 --size=8G --readwrite=randwrite --numjobs=1 --filename=\\.\PhysicalDrive2
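To repeat the run for each IO pattern, the command can be generated from a small script. The following is a minimal sketch rather than the exact tooling behind the numbers in this post: the `build_fio_command` and `run_all` helpers are hypothetical names, and the four `--readwrite` values are assumed to correspond to the random/sequential read and write columns reported below. All other flags are taken from the command above.

```python
import subprocess

# Standard fio --readwrite values; assumed to map to the rand_r, seq_r,
# rand_w and seq_w columns used in the result tables.
PATTERNS = ["randread", "read", "randwrite", "write"]


def build_fio_command(target, pattern, block_size="2M", iodepth=64, size="8G"):
    """Return the fio argument list for one IO pattern (hypothetical helper)."""
    return [
        "fio",
        "--randrepeat=1",
        "--direct=1",
        "--gtod_reduce=1",
        "--name=test",
        f"--bs={block_size}",
        f"--iodepth={iodepth}",
        f"--size={size}",
        f"--readwrite={pattern}",
        "--numjobs=1",
        f"--filename={target}",
    ]


def run_all(target, dry_run=True):
    """Print (or, with dry_run=False, execute) one fio run per pattern."""
    for pattern in PATTERNS:
        cmd = build_fio_command(target, pattern)
        if dry_run:
            print(" ".join(cmd))
        else:
            # Write patterns destroy data: only point this at a scratch disk.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Dry run by default, so nothing is written to the disk.
    run_all(r"\\.\PhysicalDrive2")
```

Keeping the dry run as the default is deliberate, since the write patterns overwrite whatever is on the raw device.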

Windows tuning

The following settings can improve IO throughput:

- Windows power plan – few people expect this to be a concern for servers, but the “high performance” power plan is not enabled by default, which can lead to CPU throttling

- Adding the Ceph and FIO binaries to the Windows Defender whitelist

- Using Jumbo frames – we’ve noticed a 15% performance improvement

- Enabling the CUBIC TCP congestion algorithm on Windows Server 2016

Test results

Baremetal RBD and CephFS IO

Let’s get straight to the RBD and CephFS benchmark results. Note that we’re using MB/s for measuring speed (higher is better).

    +-----------+------------+--------+-------+--------+-------+
    | OS        | tool       | rand_r | seq_r | rand_w | seq_w |
    +-----------+------------+--------+-------+--------+-------+
    | WS2016    | rbd-wnbd   |    854 |   925 |    807 |   802 |
    | WS2019    | rbd-wnbd   |   1317 |  1320 |   1512 |  1549 |
    | WS2019    | iscsi-gw   |    324 |   319 |    624 |   635 |
    | Ubuntu 20 | krbd       |    696 |   669 |    659 |   668 |
    | Ubuntu 20 | rbd-nbd    |    559 |   567 |    372 |   407 |
    |           |            |        |       |        |       |
    | WS2016    | ceph-dokan |    642 |   605 |    690 |   676 |
    | WS2019    | ceph-dokan |    988 |   948 |    938 |   935 |
    | Ubuntu 20 | ceph-fuse  |    162 |   181 |    121 |   138 |
    | Ubuntu 20 | kern ceph  |    687 |   663 |    677 |   674 |
    +-----------+------------+--------+-------+--------+-------+

It is worth mentioning that RBD caching has been disabled. As we can see, Windows Server 2019 manages to deliver impressive IO throughput. Windows Server 2016 isn’t as fast but it still manages to outperform the Linux RBD clients, including krbd. We are seeing the same pattern on the CephFS side.

We’ve tested the iSCSI gateway with an all-in-one Ceph cluster. The performance bottleneck is likely to become more severe with larger Ceph clusters, considering that the iSCSI gateway doesn’t scale very well.
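To put the RBD numbers in perspective, the throughput ratios can be worked out directly from the table. This is a small sketch for illustration: the `speedup` helper is a hypothetical name, and the figures are simply copied from the RBD rows above.

```python
# Throughput in MB/s, copied from the RBD rows of the results table.
# Column order: rand_r, seq_r, rand_w, seq_w.
COLUMNS = ("rand_r", "seq_r", "rand_w", "seq_w")
RESULTS = {
    ("WS2016", "rbd-wnbd"): (854, 925, 807, 802),
    ("WS2019", "rbd-wnbd"): (1317, 1320, 1512, 1549),
    ("WS2019", "iscsi-gw"): (324, 319, 624, 635),
    ("Ubuntu 20", "krbd"): (696, 669, 659, 668),
}


def speedup(candidate, baseline):
    """Per-column throughput ratio of candidate over baseline."""
    return {
        col: round(c / b, 2)
        for col, c, b in zip(COLUMNS, RESULTS[candidate], RESULTS[baseline])
    }


if __name__ == "__main__":
    wnbd = ("WS2019", "rbd-wnbd")
    print("vs iSCSI gateway:", speedup(wnbd, ("WS2019", "iscsi-gw")))
    print("vs krbd:         ", speedup(wnbd, ("Ubuntu 20", "krbd")))
```

With these figures, rbd-wnbd on WS 2019 comes out at roughly 4x the iSCSI gateway for reads and about 2.4x for writes, and at roughly 1.9x to 2.3x krbd.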

Virtual Machines

Providing virtual machine block storage is also one of the main Ceph use cases. Here are the test results for Hyper-V Server 2019 and KVM on Ubuntu 20.04, both running Ubuntu 20.04 and Windows Server 2019 VMs booted from RBD images.

    +-----------------------+--------------+--------------+
    | Hypervisor \ Guest OS |   WS 2019    | Ubuntu 20.04 |
    +-----------------------+------+-------+------+-------+
    |                       | read | write | read | write |
    +-----------------------+------+-------+------+-------+
    | Hyper-V               | 1242 | 1697  | 382  | 291   |
    | KVM                   | 947  | 558   | 539  | 321   |
    +-----------------------+------+-------+------+-------+

The WS 2019 Hyper-V VM managed to get almost native IO speed. What’s interesting is that the WS 2019 guest fared better than the Ubuntu guest even on KVM, which is probably worth investigating.

WNBD

As stated in the previous post, our initial approach was to attach RBD images using the NBD protocol. That didn’t deliver the performance that we were hoping for, mostly due to the Winsock Kernel (WSK) framework, which is why we implemented a more efficient IO channel from scratch. For convenience, you can still use WNBD as a standalone NBD client for other purposes, in which case you may be interested in knowing how well it performs. It manages to deliver 933MB/s on WS 2019 and 280MB/s on WS 2016 in this test environment.

At the moment, rbd-wnbd uses DeviceIoControl to retrieve IO requests and send IO replies back to the WNBD driver, a technique also known as inverted calls. Unlike the RBD NBD server, libwnbd allows adjusting the number of IO dispatch workers. The following table shows how the number of workers impacts performance. Keep in mind that in this specific case, we are benchmarking the driver connection, so no data gets transmitted from / to the Ceph cluster. This gives us a glimpse of the maximum theoretical bandwidth that WNBD could provide, fully saturating the available CPUs:

    +---------+------------------+
    | Workers | Bandwidth (MB/s) |
    +---------+------------------+
    |       1 |             1518 |
    |       2 |             2917 |
    |       3 |             4240 |
    |       4 |             5496 |
    |       8 |            11059 |
    |      16 |            13209 |
    |      32 |            12390 |
    +---------+------------------+
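One way to read this table is to compare each measurement against ideal linear scaling from the single-worker result. The sketch below does that arithmetic; `scaling_efficiency` is a hypothetical helper name and the figures are copied from the table.

```python
# Bandwidth in MB/s per worker count, copied from the table above.
BANDWIDTH = {1: 1518, 2: 2917, 3: 4240, 4: 5496, 8: 11059, 16: 13209, 32: 12390}


def scaling_efficiency(results):
    """Measured bandwidth divided by ideal linear scaling from one worker."""
    base = results[1]
    return {workers: bw / (workers * base) for workers, bw in results.items()}


if __name__ == "__main__":
    for workers, eff in scaling_efficiency(BANDWIDTH).items():
        print(f"{workers:>2} workers: {eff:.0%} of linear scaling")
```

Scaling stays above 90% of linear up to 8 workers, drops to about 54% at 16 workers, and the absolute bandwidth declines slightly at 32, which is consistent with the CPUs being saturated at that point.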

RBD commands

Apart from IO performance, we were also interested in making sure that a large number of disks can be managed at the same time. For this reason, we wrote a simple Python script that creates a temporary image, attaches it to the host, performs various IO operations and then cleans it up. Here are the test results for 1000 iterations, 50 at a time. This test was essential in improving RBD performance and stability.

    +---------------------------------------------------------------------------------+
    |                                  Duration (s)                                   |
    +--------------------------+----------+----------+-----------+----------+---------+
    | function                 | min      | max      | total     | mean     | std_dev |
    +--------------------------+----------+----------+-----------+----------+---------+
    | TestRunner.run           | 283.5339 | 283.5339 |  283.5339 | 283.5339 |  0.0000 |
    | RbdTest.initialize       |   0.3906 |  10.4063 | 3483.5180 |   3.4835 |  1.5909 |
    | RbdImage.create          |   0.0938 |   5.5157 |  662.8653 |   0.6629 |  0.6021 |
    | RbdImage.map             |   0.2656 |   9.3126 | 2820.6527 |   2.8207 |  1.5056 |
    | RbdImage.get_disk_number |   0.0625 |   8.3751 | 1888.0343 |   1.8880 |  1.5171 |
    | RbdImage._wait_for_disk  |   0.0000 |   2.0156 |   39.1411 |   0.0391 |  0.1209 |
    | RbdFioTest.run           |   2.3125 |  13.4532 | 8450.7165 |   8.4507 |  2.2068 |
    | RbdImage.unmap           |   0.0781 |   6.6719 | 1031.3077 |   1.0313 |  0.7988 |
    | RbdImage.remove          |   0.1406 |   8.6563 |  977.1185 |   0.9771 |  0.8341 |
    +--------------------------+----------+----------+-----------+----------+---------+

I hope you enjoyed this post. Don’t take these results for granted; feel free to run your own tests and let us know what you think!

Coming next

Stay tuned for the next part of this series if you want to learn more about how Ceph integrates with OpenStack and Hyper-V.